The dictionary definition of feminism is “the theory of the political, economic, and social equality of the sexes.” I agree with this definition; however, I feel that it fails to clarify that feminism, although it may have begun as such, is not limited to striving for equality based purely on gender. Feminism extends beyond equality between the sexes to equality for everyone, regardless of race, sexuality, or social class. It is about advocating for the rights of all, but especially of the minorities that have been silenced over the course of history. Men, overwhelmingly but not exclusively those who are white and cisgender, are at the forefront of society and are often unnecessarily brought into discussions of accomplishments made by women, people of color, or the LGBTQ community. One barrier that I would like to see broken is the way women are often pitted against each other, especially in the entertainment industry and on social media. As women, we are supposed to be lifting each other up rather than focusing on who may be more successful. I also think that if we focused more on educating those who misunderstand feminism rather than trying to tear them down, feminism wouldn’t get such a bad rap, and more people would see that it is a movement that can benefit us all.

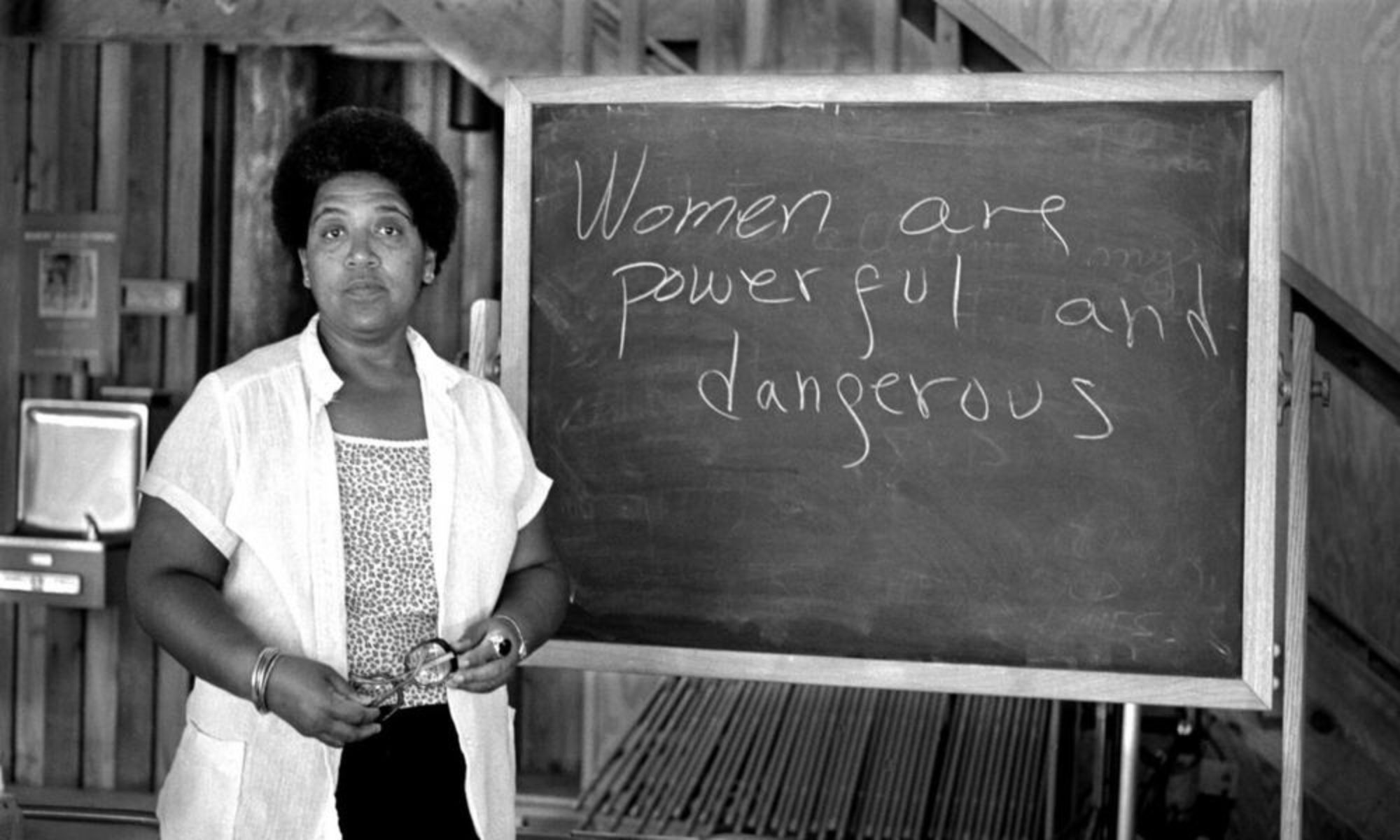

Prof. Gwendolyn Shaw